
NetoAI is an AWS partner for telecom-specific LLMs

NetoAI Frontier Models: Building the Future of Enterprise Intelligence

From deep reasoning to decisive action, from static text to human-like voice: step into the breakthroughs shaping the future of AI.

Trusted by visionary global partners

AI-Powered Enterprise Transformation

An ecosystem of pretrained industry models – spanning LLMs, SLMs, ML, and voice – to fast-track AI innovation into business outcomes

TSLAM

The world’s first telecom-specific Large Language Model, TSLAM sets a new industry benchmark by delivering Telecom SME intelligence, with precise, contextual reasoning at scale across the customer and network life cycles

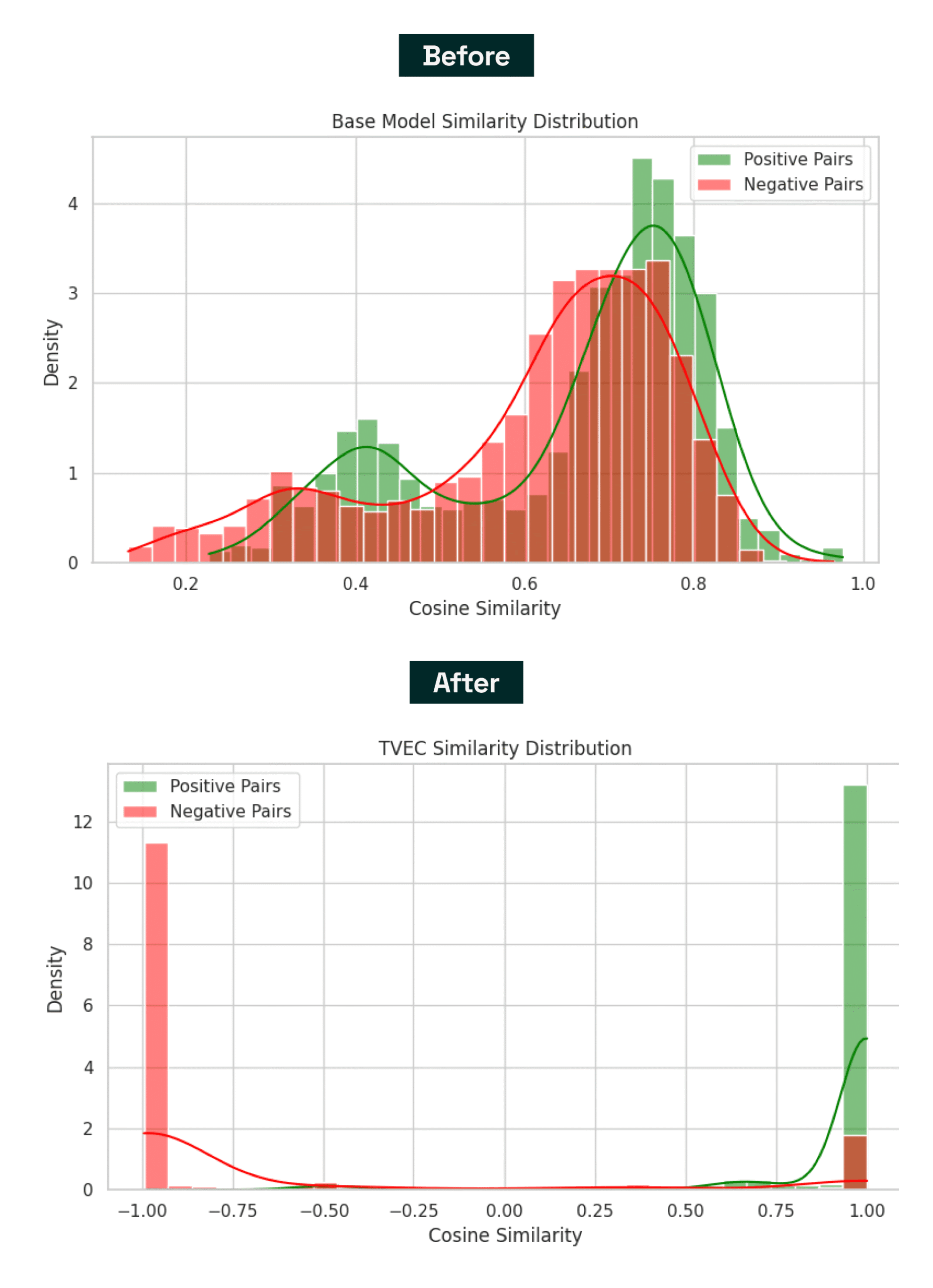

T-VEC

The world’s first telecom-trained vectorization model, which understands industry jargon and nuance with 93% accuracy, enabling precise retrieval, advanced correlation, and enterprise-grade decision support

Ethical AI

Embedding trust and governance into the AI fabric through a structured framework of 7 categories and 11 sub-categories, ensuring that fairness, accountability, and responsible deployment remain integral to every conversation

ML Hub

Leveraging 20+ machine learning models to drive predictive and adaptive capabilities, enabling organizations to forecast, optimize, and automate operations with scalability, precision, and transparency

Voice Model

Elevating human-like conversation through T-Synth TTS, engineered for immediacy, clarity, and multilingual reach. The enterprise-ready voice agent delivers frictionless, contextual experiences with sub-600 ms latency

Scalable, Cost-Effective, Enterprise Ready

Accelerate your business with industry-specific LLMs (TSLAM). Get smarter outcomes at lower cost and with greater sustainability, all while maintaining complete control over your data. Our flexible deployment options, from on-premises to the edge, ensure you meet all security and compliance requirements.

Open Source on Hugging Face

Oct ’25

TSLAM – 4B

For Edge Computing – Retrieve runbooks and telemetry, ground responses with RAG.


Mar ’25

TSLAM – 1.5B

For Laptop & Desktop – Triage tickets, extract intents, summarize diagnostics.


Jul ’25

TSLAM – Mini 2B

For Laptop & Desktop – Assist field ops with checklists, commands, and context.


Enterprise Models

May ’25

TSLAM – 8B

For Cloud & On-prem (GPU Infra) – Operate agent workflows, call tools, enforce policies.


Aug ’25

TSLAM – 18B

For Cloud & On-prem (GPU Infra) – Reason across layers; propose root causes and remediations.


What Makes Our Models Different

Cross-Domain Telecom Reasoning

Delivers reasoning across the customer and network life cycles, backed by deep knowledge of vendor specifications, industry standards, configurations, and regulatory requirements.

Enterprise-Grade Governance

Policy guardrails, RBAC, and audit trails, plus evidence-backed safety, bias, and robustness checks, deliver secure, compliant, transparent, and trustworthy AI by design.

Proven Domain Accuracy

Validated at ~93% domain accuracy on telco workflows and terminology, outperforming general-purpose LLMs in operator contexts.

Low-Latency, Efficient Inference

Optimized decoding and quantization enable real-time agent conversations with high throughput, even on constrained compute.

Cloud Ready, On-Prem Capable

Deploy on-prem, at the edge, on mobile, or across private and public clouds – built for portability, control, and compliance.

Multimodal & Multilingual Capability

Built with multimodal understanding and multilingual fluency, our models interpret customer needs across voice, text, and documents, powering seamless interactions.

Model Que

Model Que transforms a generic base LLM into an industry-specific powerhouse. It automatically converts any type of document (PDFs, reports, manuals, or logs) into a structured query-answer dataset for fine-tuning. With built-in optimization capabilities, Model Que ensures the adapted LLM is not only domain-trained but also efficient, accurate, and ready for real-world deployment.

Faster Fine-tuning from months to weeks


Reduction in Model Fine-tuning cost


Coverage on Domain, Ethical & Technical Benchmarking

T-VEC: Telecom-Specific Embedding Model

Deeply fine-tuned with a triplet-loss objective on curated telco corpora, it is the first open-source telecom tokenizer and leads benchmarks (MTEB avg 0.825; 0.938 on an internal telecom triplet test vs <0.07 for generic models).
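The page does not publish T-VEC’s training code. As a minimal sketch of the general triplet-loss objective named above, assuming Euclidean distance and a margin of 1.0 (both assumptions, not T-VEC’s documented settings), the loss for one (anchor, positive, negative) embedding triple can be written as follows. The helper names `euclidean`, `triplet_loss`, and `batch_triplet_loss` and the toy vectors are hypothetical.

```python
import math
from typing import Sequence


def euclidean(u: Sequence[float], v: Sequence[float]) -> float:
    """Euclidean (L2) distance between two equal-length vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_loss(anchor, positive, negative, margin: float = 1.0) -> float:
    """Triplet margin loss: max(0, d(anchor, positive) - d(anchor, negative) + margin).

    The loss is zero once the negative sits at least `margin` farther from the
    anchor than the positive; otherwise it is positive and training pushes the
    two distances apart. The margin of 1.0 is an illustrative assumption.
    """
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


def batch_triplet_loss(triplets, margin: float = 1.0) -> float:
    """Mean triplet loss over a list of (anchor, positive, negative) triples."""
    losses = [triplet_loss(a, p, n, margin) for a, p, n in triplets]
    return sum(losses) / len(losses)


# Toy 2-D "embeddings" (hypothetical values, not T-VEC outputs).
anchor = [0.0, 0.0]
positive = [0.0, 1.0]        # distance 1 from the anchor
far_negative = [3.0, 4.0]    # distance 5: already separated by more than the margin
near_negative = [1.0, 0.0]   # distance 1: violates the margin

print(triplet_loss(anchor, positive, far_negative))   # 0.0
print(triplet_loss(anchor, positive, near_negative))  # 1.0
print(batch_triplet_loss([(anchor, positive, far_negative),
                          (anchor, positive, near_negative)]))  # 0.5
```

Minimizing this loss over many triples pulls text with the same telecom meaning closer together in embedding space and pushes unrelated text apart, which is what makes such an embedding useful for retrieval.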


AI Accuracy in Telecom Domain


Hallucination Reduction - RAG Faithfulness Improvement


Pass Rate Across Policy Governance and Security Checks

Predictor

Predictor simplifies the journey from raw data to powerful insights. It streamlines the entire ML lifecycle – from data pre-processing and visualisation to model selection, training, and evaluation. With built-in accuracy tracking and deployment capabilities, Predictor can create, optimise, and scale ML models for actionable intelligence.

Faster Fine-tuning from months to weeks


Reduction in Model Fine-tuning cost


Coverage on Domain, Ethical & Technical Benchmarking

AI Models Trained Across Customer and Network Life Cycle

20+ Models Supporting Forecasting & Prediction across Sales, Marketing, Billing and Network

Network Alarm Management

- Alarm Correlation

- Network Outage Prediction

- AI-driven Root Cause Analysis

Troubleshoot Network Faults

- Network Fault Prediction

- Guided Fault Troubleshooting

- Closed Loop Automation

Network Planning

- Capacity Forecasting

- Automated Plan and Build

- Digital Twin - Simulation

Sales & Marketing

- Personalisation Model

- Sales Forecast Model

- Recommendation Engine

- Lead Scoring

- Dynamic Pricing Model

Intelligent Customer Service

- Customer Reliability Analysis

- Sentiment Analysis

- Customer Lifetime Value Analysis

- Churn Prediction

- Customer Feedback Analysis

Service Delivery

- Intent-driven Service Fulfilment

- Automated Optimal Path Selection

- Quote to Order Conversion Assistant

Next-Gen Voice AI for Human-Centric Enterprise Conversations

NetoAI’s Voice AI Agents redefine enterprise engagement with low-latency, multilingual, and human-centric interactions – built for effortless deployment across every customer channel, and engineered to scale with unmatched intelligence and flexibility.

Faster time-to-build from design to deployment

Cost-effective, with an on-prem optimized pipeline instead of APIs

Multilingual support out of the box: Spanish, Arabic, French, Hindi, and more

Key Features

Human-Centric Conversations

Intelligent interruption handling with multilingual fluency and regional dialect adaptation – making every interaction natural and effortless

Unified Omnichannel Experience

Seamlessly connect across channels – calls, apps, IVR, or digital platforms – ensuring consistency everywhere customers engage

Voice Cloning & Personalization

Create realistic, brand-aligned voices that adapt to your enterprise identity and customer expectations

Real-Time Responsiveness

Delivering end-to-end responses in under 600 ms, enabling real-time, natural, fluid conversations

Flexible Enterprise Deployment

Built for any environment – mobile, on-prem, or cloud – with enterprise-grade portability and control

Enterprise-Grade Security & Governance

Designed with built-in governance, compliance, and enterprise security to protect data and ensure trust at scale

NetoAI’s Ethical AI Framework

(Bias Mitigation, Robustness Validation, Privacy Protection)

Comprehensive Evaluation: thousands of prompts across categories rigorously test the models to ensure robust, ethical, and reliable AI performance.

Top Resources for You

TSLAM 1.5B is a telecom-specific Small Language Model (SLM) that provides a strong foundation for telecom service lifecycle automation, a domain-focused alternative to general-purpose LLMs and SLMs.